This is the project page for the paper “Impact of Co-occurrence on Factual Knowledge of Large Language Models”. You may refer to the additional materials, including the code, poster and slides.

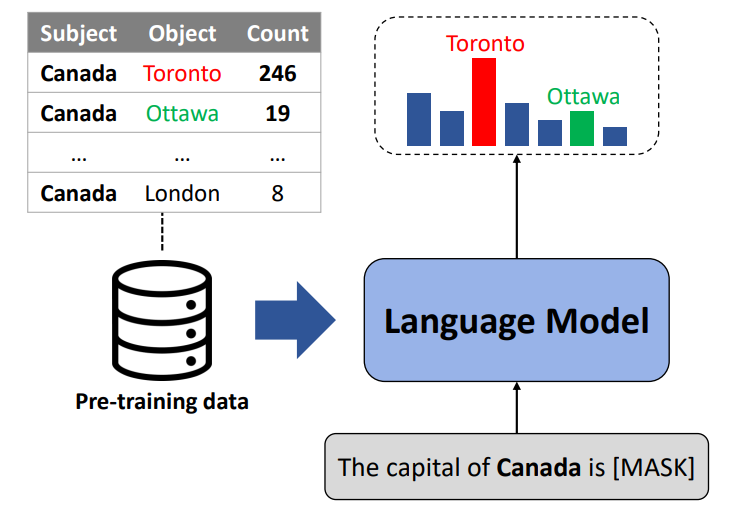

This figure shows the overall framework of our correlation analysis between co-occurrence counts and the factual knowledge of LLMs. We assume that if the target model relies heavily on subject-object co-occurrence, it is more likely to recall the most frequently co-occurring word without accurate semantic understanding. For instance, in this hypothetical example, the model fails to answer a question about the capital of Canada: it generates the most frequently co-occurring word, 'Toronto', while the correct answer is 'Ottawa'. This illustrates how relying heavily on co-occurrence statistics can lead to factual errors.

Abstract

Large language models (LLMs) often produce factually incorrect responses despite their success in various applications. In this paper, we hypothesize that relying heavily on simple co-occurrence statistics of the pre-training corpora is one of the main factors that cause factual errors. Our results reveal that LLMs are vulnerable to the co-occurrence bias, defined as preferring frequently co-occurring words over the correct answer. Consequently, LLMs struggle to recall facts whose subject and object rarely co-occur in the pre-training dataset, even when those facts are seen during finetuning. We show that co-occurrence bias remains despite scaling up model sizes or finetuning. Therefore, we suggest finetuning on a debiased dataset, built by filtering out biased samples whose subject-object co-occurrence count is high, to mitigate the bias. Although debiased finetuning allows LLMs to memorize rare facts in the training set, it is not effective in recalling rare facts unseen during finetuning. Further research on mitigation will help build reliable language models by preventing potential errors. The code is available at https://github.com/CheongWoong/impact_of_cooccurrence.

Factual Knowledge Probing

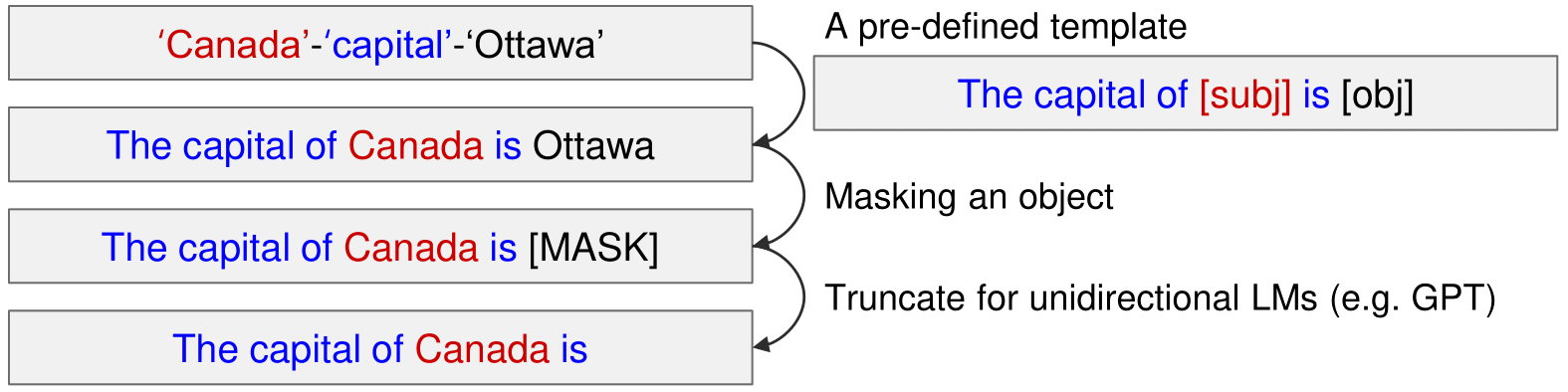

The LAMA Probe

We adopt the LAMA-TREx dataset, which consists of 41 relations, to probe the factual knowledge of LLMs. Facts are represented as subject-relation-object triples (e.g., ‘Canada’-‘capital’-‘Ottawa’). Each fact is converted to natural language using a pre-defined set of templates for relations (e.g., “The capital of Canada is Ottawa.”). It is then converted to a Cloze statement by masking the object (e.g., “The capital of Canada is [MASK].”). To query unidirectional LMs, we use the sentence truncated right before the mask token (e.g., “The capital of Canada is”).
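The template-to-query pipeline described above can be sketched as follows. This is a minimal illustration, not the project's code. The `TEMPLATES` dictionary, its "capital" key, and the `[X]`/`[Y]` slot markers are hypothetical stand-ins, and the real LAMA-TREx templates may be worded differently.

```python
# Hypothetical relation templates: "[X]" is the subject slot, "[Y]" the object slot.
TEMPLATES = {
    "capital": "The capital of [X] is [Y].",
}

MASK = "[MASK]"


def to_cloze(subj: str, relation: str) -> str:
    """Fill the subject into the relation template and mask the object."""
    return TEMPLATES[relation].replace("[X]", subj).replace("[Y]", MASK)


def to_unidirectional_query(cloze: str) -> str:
    """Truncate the Cloze statement right before the mask token."""
    return cloze[: cloze.index(MASK)].rstrip()


cloze = to_cloze("Canada", "capital")
print(cloze)                           # The capital of Canada is [MASK].
print(to_unidirectional_query(cloze))  # The capital of Canada is
```

A left-to-right model then continues the truncated query, and its next-token prediction is compared with the gold object.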

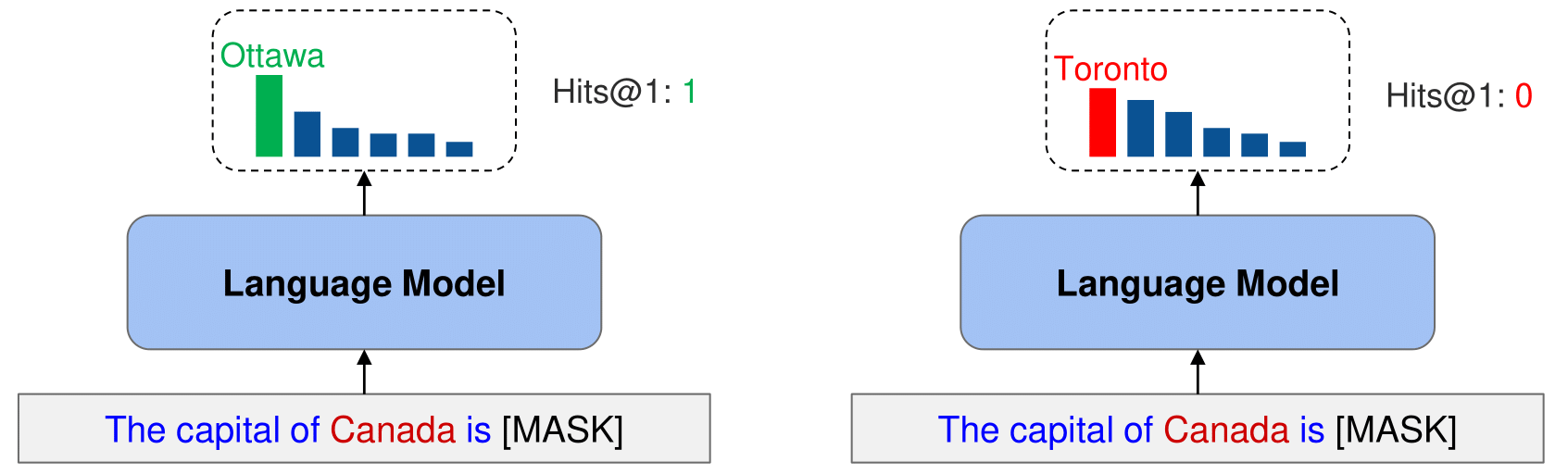

Metrics

Following the knowledge base completion literature, we use hits@1 to evaluate performance on factual knowledge probing. Hits@1 is 1 if the correct answer is the top-ranked prediction and 0 otherwise. When computing hits@1, we remove the other valid objects of the same subject-relation pair from the candidates, so that only the object under test counts as correct.
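Hits@1 with this filtering can be sketched as below. This assumes the model's output is available as a dictionary of candidate scores. The function name `hits_at_1` and its argument layout are illustrative only.

```python
from typing import Dict, Iterable


def hits_at_1(scores: Dict[str, float], gold: str, other_valid: Iterable[str]) -> int:
    """Return 1 if `gold` is the top-ranked candidate after removing the other
    valid objects of the same subject-relation pair, otherwise 0."""
    excluded = set(other_valid) - {gold}
    candidates = {w: s for w, s in scores.items() if w not in excluded}
    top1 = max(candidates, key=candidates.get)
    return int(top1 == gold)


# Toy scores: 'Toronto' outranks the gold object 'Ottawa', so hits@1 is 0.
print(hits_at_1({"Toronto": 0.5, "Ottawa": 0.3, "Montreal": 0.2}, "Ottawa", []))
# When testing 'French', the other valid object 'English' is filtered out first.
print(hits_at_1({"English": 0.6, "French": 0.3, "Spanish": 0.1}, "French", ["English", "French"]))
```

Without the filtering step, a subject with several correct objects would be penalized whenever the model ranks a different correct object first.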

Restricted Candidate Sets

An example of restricting candidate sets (remove stopwords).

Since LLMs are not trained to act as knowledge bases, we follow recent work and use restricted output candidate sets. Specifically, we use three settings that restrict the output vocabulary, to study whether LLMs can recognize appropriate object candidates: (1) remove stopwords, (2) gold objects, and (3) gold objects (relation-wise). The remove stopwords setting simply removes the words in NLTK's stopword list from the output candidates. The gold objects setting restricts the output vocabulary to the set of gold objects in the whole dataset. Similarly, the gold objects (relation-wise) setting restricts the output candidates to the set of gold objects of each relation in the dataset.
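The three settings can be sketched as filters over the model's output vocabulary. This sketch makes several assumptions. `STOPWORDS` is a tiny hand-written stand-in for NLTK's much longer English list (`nltk.corpus.stopwords.words("english")`). The setting names `"remove_stopwords"`, `"gold_objects"` and `"gold_objects_relation"` are hypothetical labels.

```python
from typing import Dict, List, Optional, Set, Tuple

Triple = Tuple[str, str, str]  # (subject, relation, object)

# Small stand-in for NLTK's English stopword list (assumption for illustration).
STOPWORDS = {"the", "a", "an", "of", "in", "is", "and", "to"}


def gold_objects(triples: List[Triple]) -> Set[str]:
    """All gold objects in the whole dataset."""
    return {obj for _, _, obj in triples}


def gold_objects_by_relation(triples: List[Triple]) -> Dict[str, Set[str]]:
    """Gold objects grouped by relation."""
    by_rel: Dict[str, Set[str]] = {}
    for _, rel, obj in triples:
        by_rel.setdefault(rel, set()).add(obj)
    return by_rel


def candidate_set(vocab: List[str], setting: str, triples: List[Triple],
                  relation: Optional[str] = None) -> List[str]:
    """Restrict the output vocabulary according to one of the three settings."""
    if setting == "remove_stopwords":
        return [w for w in vocab if w.lower() not in STOPWORDS]
    if setting == "gold_objects":
        allowed = gold_objects(triples)
    elif setting == "gold_objects_relation":
        allowed = gold_objects_by_relation(triples).get(relation, set())
    else:
        raise ValueError(f"unknown setting: {setting}")
    return [w for w in vocab if w in allowed]


triples = [("Canada", "capital", "Ottawa"), ("France", "capital", "Paris"),
           ("Canada", "official_language", "English")]
vocab = ["the", "Ottawa", "Toronto", "Paris", "English", "is"]
print(candidate_set(vocab, "gold_objects_relation", triples, "capital"))  # ['Ottawa', 'Paris']
```

Each setting is stricter than the one before it, so the candidate pool shrinks from "any non-stopword" to "only objects that ever answer this relation".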

Factual Knowledge Probing with Co-occurrence Statistics

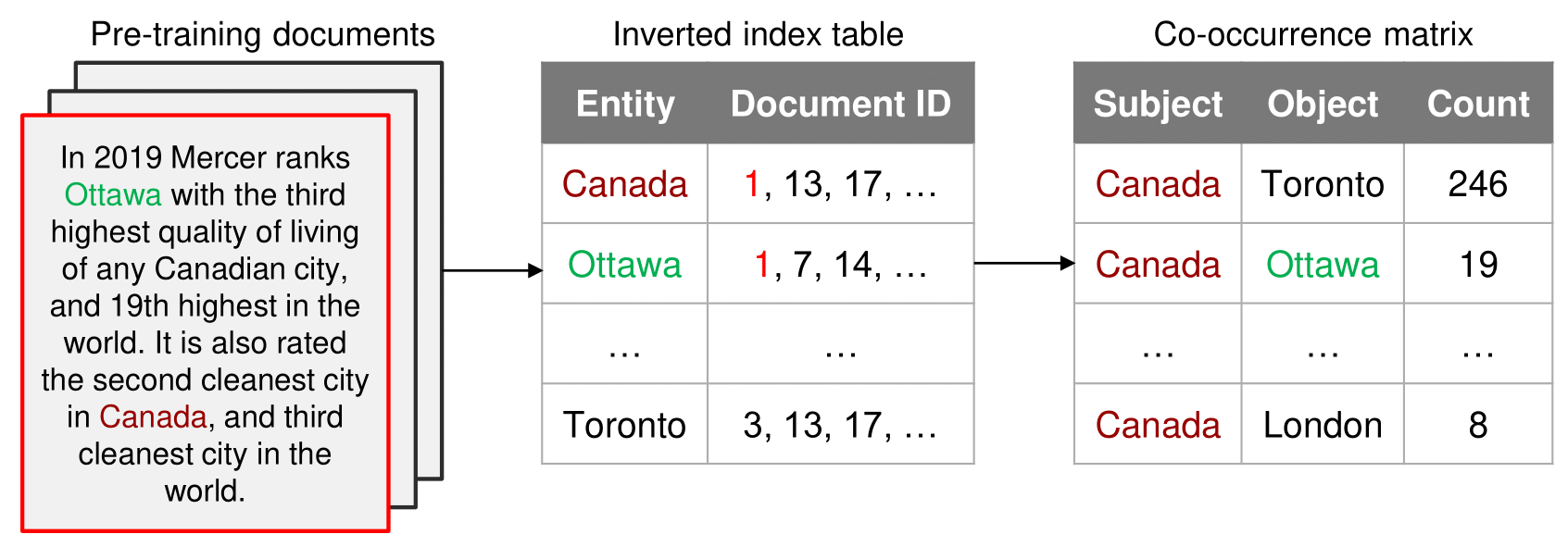

Co-occurrence Statistics

We consider the co-occurrence statistics of the pre-training dataset of the target models. Specifically, we focus on subject-object co-occurrence. This is motivated by distant supervision, which assumes that a sentence containing both the subject and the object of a triple often expresses that triple. Because the corpus contains a very large number of documents, counting with a sliding window is computationally expensive. We therefore only count whether an entity pair appears in the same document.
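Document-level co-occurrence counting can be sketched as follows. Each document contributes at most one count per (subject, object) pair, however often the entities appear in it. The plain substring matching is a simplifying assumption ("Canada" would also match inside "Canadian"). A real pipeline over the Pile would need proper entity matching and a scalable implementation.

```python
from collections import Counter
from itertools import product
from typing import Iterable, List


def count_cooccurrence(docs: Iterable[str], subjects: List[str], objects: List[str]) -> Counter:
    """For each (subject, object) pair, count the documents containing both."""
    counts: Counter = Counter()
    for doc in docs:
        subj_present = [s for s in subjects if s in doc]  # naive substring match
        obj_present = [o for o in objects if o in doc]
        for pair in product(subj_present, obj_present):
            counts[pair] += 1  # at most once per document
    return counts


docs = [
    "Toronto is the largest city in Canada.",
    "Ottawa is the capital of Canada.",
    "Toronto hosts many events in Canada.",
]
counts = count_cooccurrence(docs, ["Canada"], ["Ottawa", "Toronto"])
print(counts[("Canada", "Toronto")], counts[("Canada", "Ottawa")])  # 2 1
```

In this toy corpus 'Toronto' co-occurs with 'Canada' more often than the correct capital does, which mirrors the failure mode in the overview figure.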

Correlation Metrics

To analyze the correlation between factual knowledge of LLMs and co-occurrence statistics, we plot hits@1 of the target LLMs against the conditional probability of the gold object given a subject. Here, we use the co-occurrence statistics of the pre-training corpora to compute conditional probabilities. We divide the samples into multiple frequency (conditional probability) bins and report the average hits@1 for each bin.
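The binned analysis can be sketched as below. It assumes that $P_{pretrain}(obj|subj)$ is estimated as the number of documents containing both the subject and the object divided by the number of documents containing the subject. The bin edges and the sample values are made up for illustration and are not results from the paper.

```python
import bisect
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def cond_prob(pair_count: int, subj_count: int) -> float:
    """Estimate P_pretrain(obj | subj) as the fraction of documents containing
    the subject that also contain the object (assumed definition)."""
    return pair_count / subj_count if subj_count else 0.0


def binned_hits(samples: List[Tuple[float, int]], edges: Sequence[float]) -> Dict[int, float]:
    """Average hits@1 per bin. `samples` holds (probability, hits@1) pairs;
    `edges` are sorted inner boundaries, so bin i covers [edges[i-1], edges[i])
    and the first and last bins are open-ended."""
    hits_per_bin: Dict[int, List[int]] = defaultdict(list)
    for p, hit in samples:
        hits_per_bin[bisect.bisect_right(edges, p)].append(hit)
    return {b: sum(h) / len(h) for b, h in sorted(hits_per_bin.items())}


# Toy values for illustration only.
samples = [(cond_prob(1, 40), 0), (0.05, 0), (0.2, 1), (0.3, 0), (0.7, 1), (0.9, 1)]
print(binned_hits(samples, edges=[0.1, 0.5]))  # {0: 0.0, 1: 0.5, 2: 1.0}
```

Plotting the per-bin averages against the bin index gives the kind of curve used in the correlation figures.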

Experimental Setup

We test open-source GPT-3-style models in four sizes: GPT-Neo 125M, GPT-Neo 1.3B, GPT-Neo 2.7B, and GPT-J 6B. All are publicly available through Hugging Face's Transformers library. These models are pre-trained on the Pile, a publicly available 800 GB dataset of high-quality text drawn from 22 different sources.

Results

Factual Knowledge Probing

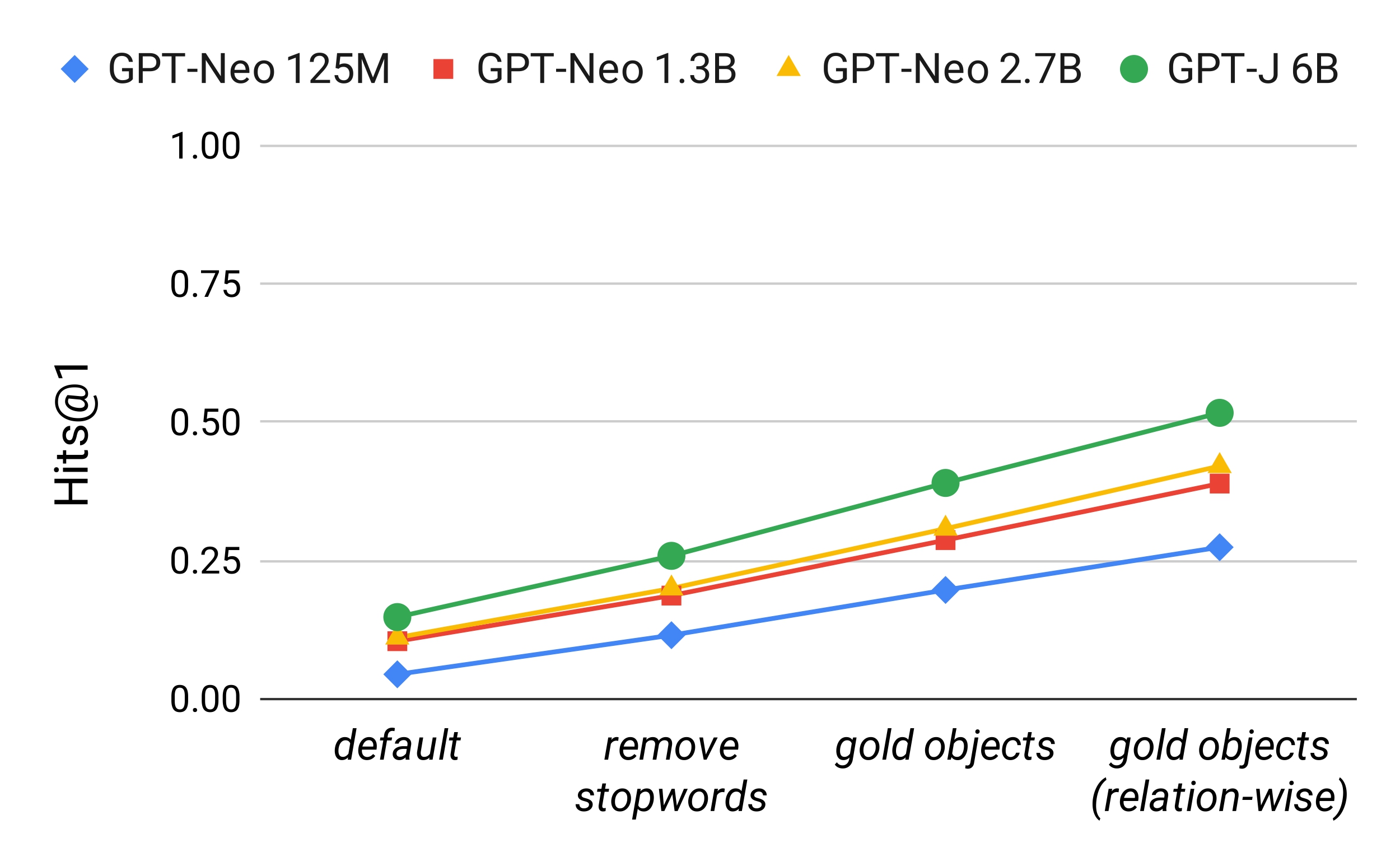

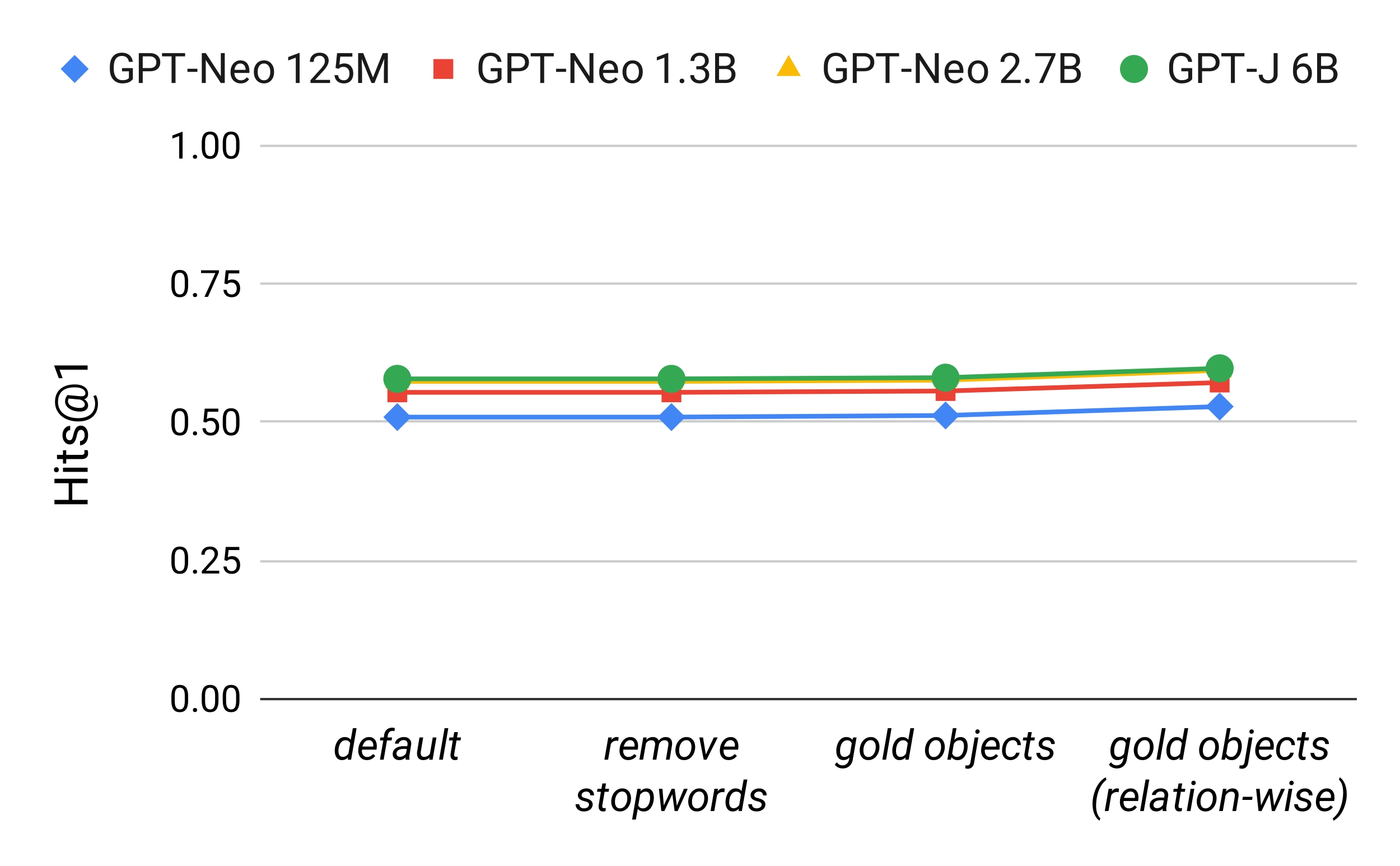

Effects of model sizes and restricted candidate sets: We plot micro-average hits@1 on the test set. (left) In the zero-shot setting, hits@1 increases as the model gets larger and as the output vocabulary is restricted to a smaller set. (right) In the finetuned setting, the effects of model size and restricted candidate sets are marginal. Effects of finetuning: (left vs. right) Finetuning boosts the overall performance.
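Micro-averaging pools all test samples before averaging, so relations with many facts carry more weight. For contrast, the sketch below also shows a macro average over relations. The macro variant is included only as an illustration and is not a claim about which averages the paper reports elsewhere. The relation labels and hits values are toy data.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def micro_hits(results: List[Tuple[str, int]]) -> float:
    """Mean hits@1 over all (relation, hits@1) samples pooled together."""
    return sum(h for _, h in results) / len(results)


def macro_hits(results: List[Tuple[str, int]]) -> float:
    """Mean of per-relation hits@1, weighting each relation equally."""
    per_rel: Dict[str, List[int]] = defaultdict(list)
    for rel, h in results:
        per_rel[rel].append(h)
    return sum(sum(v) / len(v) for v in per_rel.values()) / len(per_rel)


results = [("capital", 1), ("capital", 1), ("capital", 0), ("language", 0)]
print(micro_hits(results))  # 0.5
```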

Impact of Co-occurrence

The correlation between co-occurrence statistics and factual knowledge probing accuracy: We plot hits@1 against $P_{pretrain}(obj|subj)$, the conditional probability of the gold object given the subject, on the test set in the remove stopwords setting. In both the (left) zero-shot and (right) finetuned settings, we observe a strong correlation: hits@1 decreases as the co-occurrence count decreases. As a result, LLMs struggle to recall rare facts. This correlation remains despite scaling up model sizes or finetuning.

Correlational analysis of larger models: We test larger models (GPT-3.5 175B and ChatGPT) on a subset of the test data in the remove stopwords setting. The results confirm that the correlation remains despite scaling up model sizes.

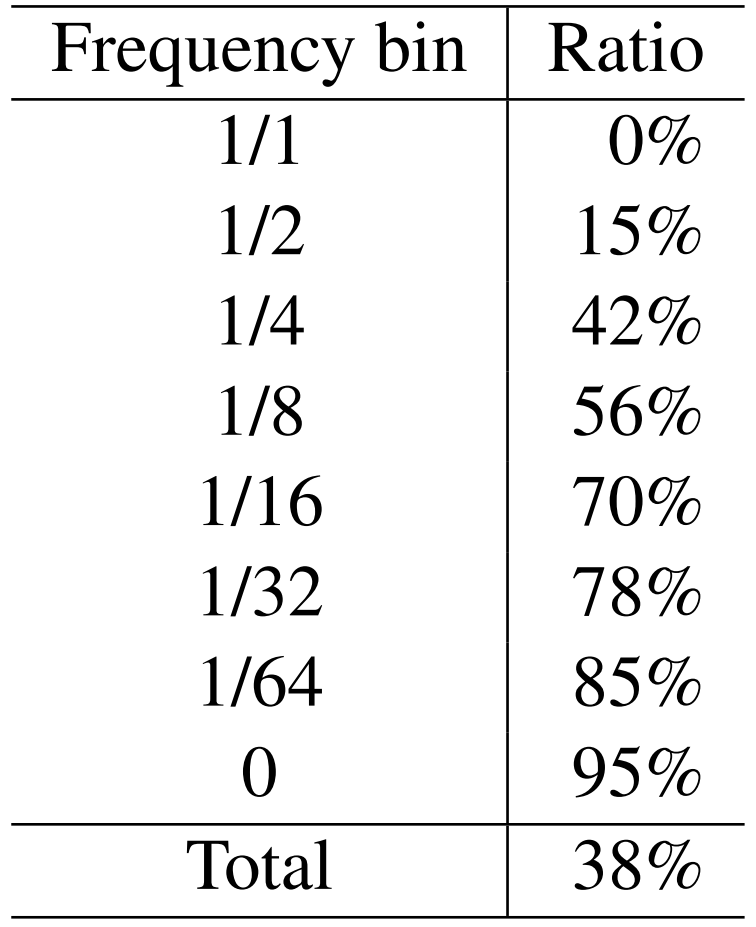

Quantitative failure analysis of GPT-J 6B: we count biased cases, in which the correct answer is overridden by a word with higher co-occurrence, and report the ratio of biased cases to all failure cases. Overall, a word with higher co-occurrence is preferred over the correct answer in 38% of the failure cases. The results for the different frequency bins show that the co-occurrence bias is more problematic when recalling rare facts.
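The biased-case ratio can be sketched as follows. The sketch assumes that "higher co-occurrence" means the predicted word co-occurs with the subject in more documents than the gold object does. The exact criterion in the paper may differ. The sample triples and counts are toy data. Applying the function separately to the samples of each frequency bin would give a per-bin breakdown.

```python
from typing import Dict, List, Tuple

Sample = Tuple[str, str, str]  # (subject, gold object, model's top-1 prediction)


def biased_failure_ratio(samples: List[Sample], cooc: Dict[Tuple[str, str], int]) -> float:
    """Among failures (prediction != gold), return the fraction where the
    prediction co-occurs with the subject more often than the gold object."""
    failures = [(s, g, p) for s, g, p in samples if p != g]
    if not failures:
        return 0.0
    biased = sum(1 for s, g, p in failures if cooc.get((s, p), 0) > cooc.get((s, g), 0))
    return biased / len(failures)


samples = [("Canada", "Ottawa", "Toronto"), ("France", "Paris", "Paris"), ("Japan", "Tokyo", "Kyoto")]
cooc = {("Canada", "Toronto"): 2, ("Canada", "Ottawa"): 1, ("Japan", "Tokyo"): 5, ("Japan", "Kyoto"): 3}
print(biased_failure_ratio(samples, cooc))  # 0.5
```

Here only the Canada failure counts as biased, because the wrong answer 'Kyoto' co-occurs with 'Japan' less often than the gold object 'Tokyo' does.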

Conclusion

- LLMs are vulnerable to the co-occurrence bias, defined as preferring frequently co-occurring words over the correct answer.

- Consequently, LLMs struggle to recall facts whose subject and object rarely co-occur in the pre-training dataset.

- Co-occurrence bias remains despite scaling up model sizes or finetuning.

- Therefore, we suggest further investigation into mitigating co-occurrence bias to ensure the reliability of language models by preventing potential harms.